In my previous blog post, I talked about the advantages of using a multi-account setup and AWS Organizations. There are many things to consider when you go in this direction.

How many accounts are needed? What structure should I use? What policies should I create? Who should have access to what?

In this blog post, I will cover what we did at ST6 and why.

Our requirements

Before I walk you through our setup, let's begin by listing the requirements that drove our decision:

- We want our organization defined as code and managed through automation

- Our users should not need to juggle multiple accounts and passwords, so we want Single Sign-On (SSO)

- Different team members should have access to specific projects based on assignment

- Each user should have an individual sandbox account

- Project costs need to be tracked separately

- We want to have a central log archive

- We need to manage security and compliance in a centralized way

- We want the solution to scale well as the number of projects and team members grows

Remember to think about what you want to achieve and why, before starting the implementation. You don't want to overcomplicate the setup.

Our solution

We evaluated our requirements, did some research, and came up with a plan.

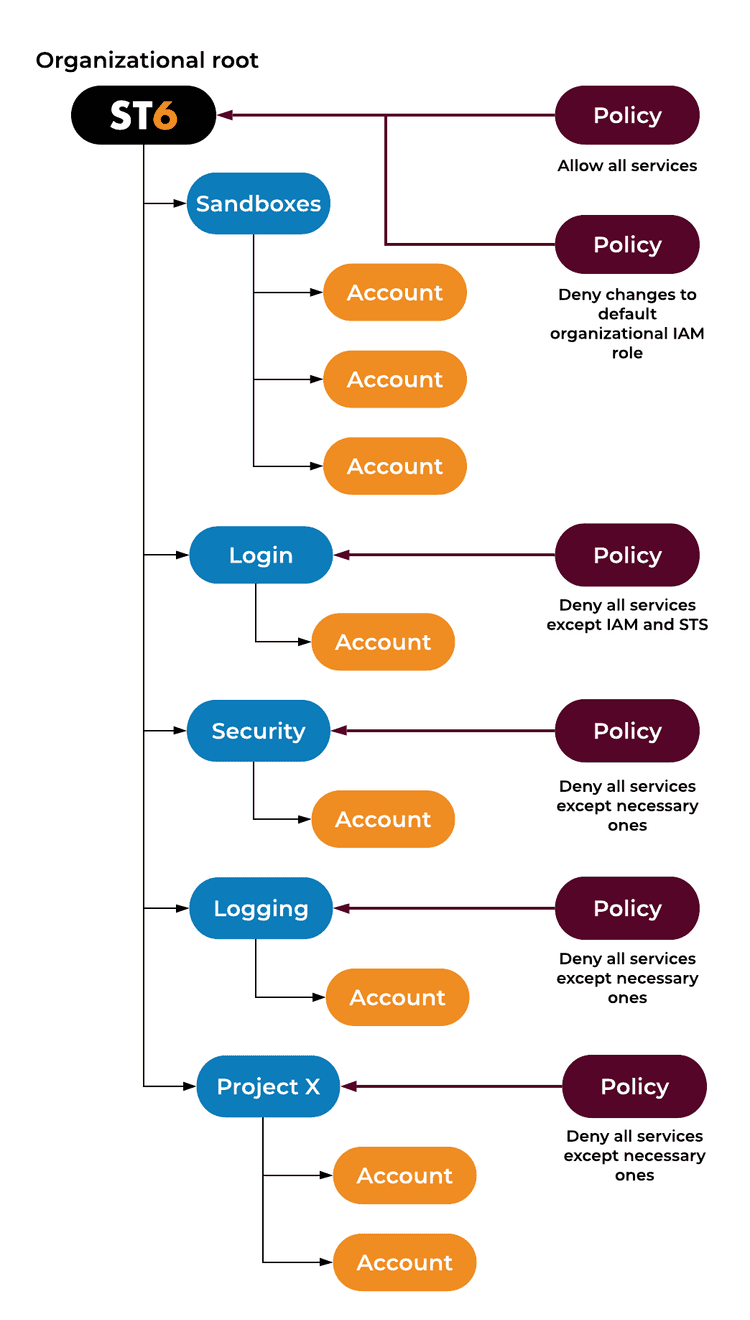

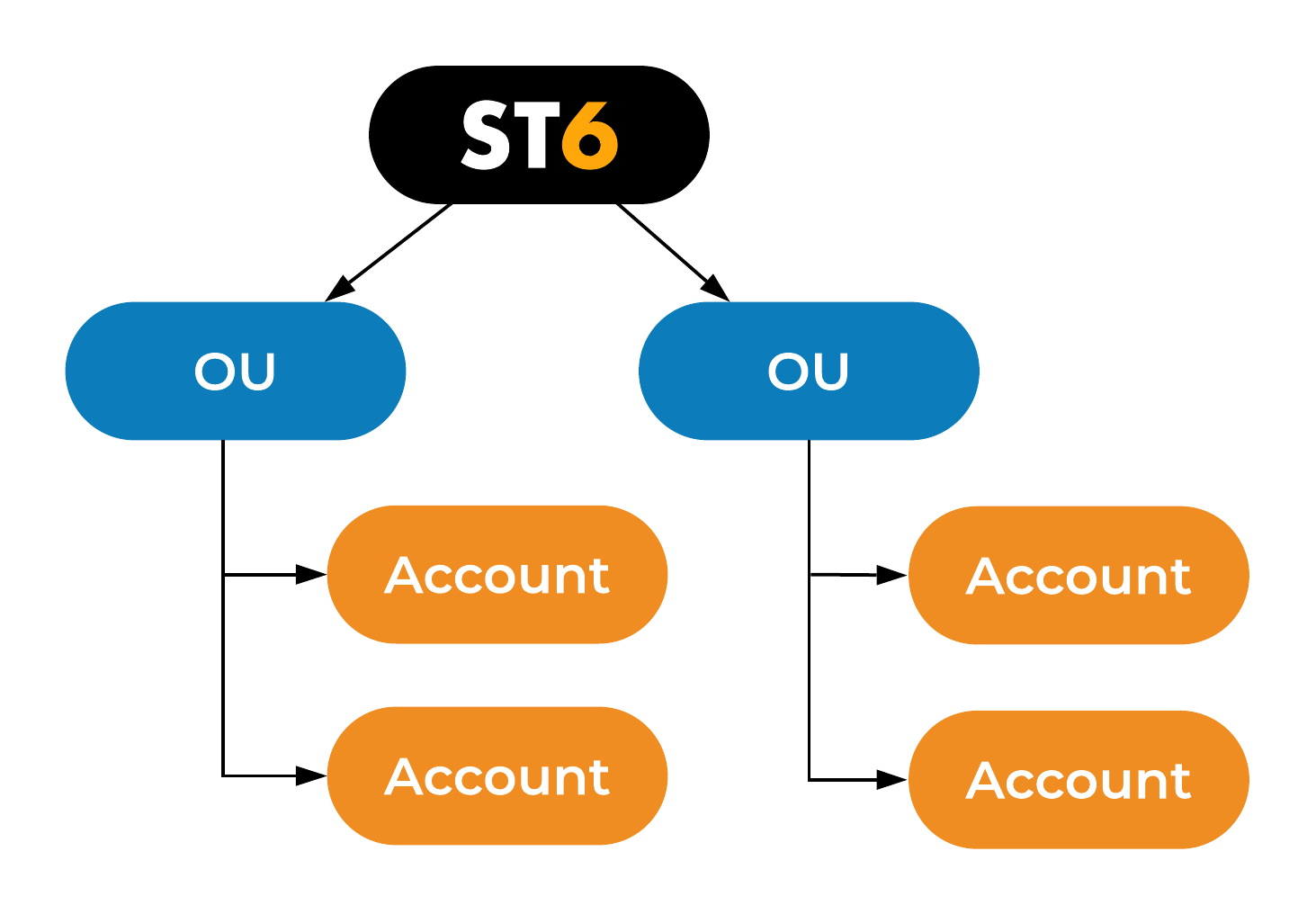

This is what our ST6 multi-account setup looks like.

How did we get here?

To answer that, let's walk through our requirements one by one and see how this setup addresses each of them.

1. Support automation

In the previous blog post, we talked about AWS Organizations, AWS Landing Zone and AWS Control Tower. AWS Organizations helps us build a multi-account organization. It already has an API, so the only missing piece is some sort of orchestration.

Enter AWS Landing Zone and AWS Control Tower. These are the first solutions you should evaluate for this purpose. Unfortunately, neither of them quite fits our needs. AWS Landing Zone is complex, and because the solution has many moving parts, it's error-prone. It's also not easy to extend if you need something that isn't included. AWS Control Tower, at the time of writing, doesn't have an API, and you can't use it with an existing organization (as is the case with ST6). In short, neither AWS Landing Zone nor AWS Control Tower works for us.

So where does this leave us?

AWS Organizations has an API, which means that we can use infrastructure provisioning tools such as AWS CloudFormation and HashiCorp's Terraform.

Let's look at CloudFormation first, since it comes from AWS. It uses JSON or YAML syntax to represent the resources you want to create, which is not ideal, but we can live with that. In my personal experience, I haven't run into any bugs in CloudFormation. A surprising drawback is that many services supported by rival tools are missing. And, as you might have guessed, AWS Organizations is not supported at the time of writing.

HashiCorp, the company behind Terraform, has gained a lot of traction in recent years, and Terraform has been available for quite some time now. It uses HCL (HashiCorp Configuration Language) version 2, HashiCorp's own configuration language, which Terraform also accepts in a JSON-compatible form. It's easy to write and understand, and it's a lot shorter than CloudFormation's YAML and especially its JSON. I have seen many bugs over the years, and the fact that there is still no stable 1.0 release at the time of writing speaks for itself. Nevertheless, the tool is good and it gets the job done. An additional benefit of Terraform is that if you already have an AWS Organization and want to manage it with code, you can import the existing resources into Terraform.
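The following is a minimal sketch of the import workflow, adopting an existing organization. It assumes Terraform 1.5 or later, which added `import` blocks. Older versions use the `terraform import` CLI command instead. The organization ID `o-exampleid12` is a placeholder. The service principals listed are only examples, matching services discussed later in this post. After the import, `terraform plan` reports any differences between this configuration and the real organization, and you reconcile them in code.

```hcl
# Adopt an existing AWS Organization into Terraform state.
# Requires Terraform 1.5+ for import blocks; the ID below is a placeholder.
import {
  to = aws_organizations_organization.org
  id = "o-exampleid12"
}

resource "aws_organizations_organization" "org" {
  # Example trusted-access principals; adjust to match your organization.
  aws_service_access_principals = [
    "cloudtrail.amazonaws.com",
    "config.amazonaws.com",
    "guardduty.amazonaws.com",
  ]

  # Needed before service control policies (SCPs) can be attached.
  enabled_policy_types = ["SERVICE_CONTROL_POLICY"]

  # SCPs require the "all features" feature set.
  feature_set = "ALL"
}
```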

Both CloudFormation and Terraform use a declarative syntax (HCL2 adds some limited functionality on top, such as conditionals and loops). Sometimes, when you write configuration, you wish you had the power of a full-blown programming language.

To me, using the same programming language for application code and infrastructure is perfectly logical.

At ST6, we love DevOps. One DevOps technique is to have cross-functional teams. What better way to achieve this than to have all team members use the same tools and languages? So let's see which tools can help us here. AWS Cloud Development Kit (AWS CDK) and Pulumi promise to deliver just that. Both of them let you define AWS resources in familiar languages like Python or JavaScript. Unfortunately, we can't use them in our case. Under the hood, AWS CDK uses CloudFormation, which doesn't support AWS Organizations, and Pulumi is, at the time of writing, free only for individual use. I'm sure we will try these tools in future projects and share our experience.

Considering all of the above, we chose to go with Terraform.

2. Single Sign-On

Remembering many usernames and passwords is always hard. It can even lower your security, as many people in that position start using weak passwords. At ST6, we want our team to focus on delivering value to customers, not on remembering passwords when working in different AWS accounts.

There are many approaches to this, and many of them require Active Directory. As a relatively small company, we use G Suite. We might need to switch to something that relies on Active Directory in the future, but for the time being, it would be ideal if we could use the same G Suite users for our AWS infrastructure.

You can set up federated access with G Suite; for more information, see this AWS article. We don't like this solution, as it requires a lot of manual work in the G Suite Admin Console and API. It's also not very convenient if you use access keys often, as you need to generate new ones when the session expires. Finally, it's not obvious who has access and when they were granted it.

After some thought, we decided to have a central Login account (or Identity account if you wish).

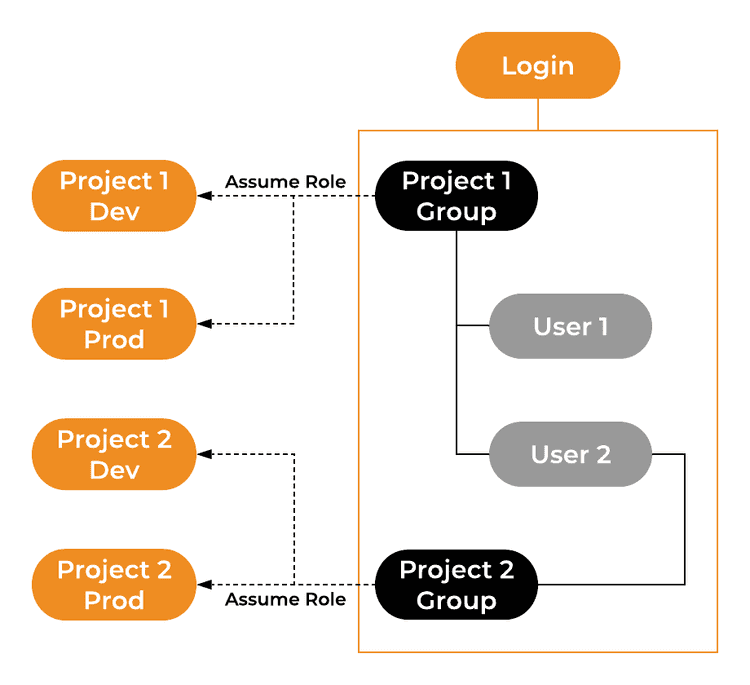

Each developer has an IAM User that can assume IAM Roles in other accounts. This way, we can manage the IAM Users with code, and our developers have to remember just one additional password. Each user can create an access key and set up an MFA device. MFA is required when assuming roles in other accounts with the AWS CLI.
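The following is a minimal Terraform sketch of this pattern. Several names are hypothetical: the developer names, the account IDs, the region, the `project_dev` provider alias and the `TerraformDeploy` role. The default provider is assumed to point at the Login account. The role trusts the whole Login account, but the `aws:MultiFactorAuthPresent` condition key means only MFA-authenticated sessions can assume it.

```hcl
# Hypothetical developer names; in practice these would come from a variable.
locals {
  developers = toset(["alice", "bob"])
}

# Placeholder ID of the Login account.
variable "login_account_id" {
  type    = string
  default = "444444444444"
}

# IAM users exist only in the Login account (default provider, by assumption).
resource "aws_iam_user" "developer" {
  for_each = local.developers
  name     = each.key
}

# Hypothetical provider alias for a project account.
provider "aws" {
  alias  = "project_dev"
  region = "eu-west-1"
  assume_role {
    role_arn = "arn:aws:iam::111111111111:role/TerraformDeploy" # placeholder
  }
}

# Role in the project account that principals from the Login account may
# assume, but only with MFA.
resource "aws_iam_role" "developer" {
  provider = aws.project_dev
  name     = "Developer"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Action    = "sts:AssumeRole"
      Principal = { AWS = "arn:aws:iam::${var.login_account_id}:root" }
      Condition = { Bool = { "aws:MultiFactorAuthPresent" = "true" } }
    }]
  })
}
```

Trusting the Login account's root principal delegates the decision to that account. A user still needs an identity policy allowing sts:AssumeRole on this role, and the project groups described in the access rights section provide that.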

3. Access rights

Since we use IAM Roles to access different AWS accounts, each user needs an IAM Policy that allows the sts:AssumeRole call on specific roles, based on their current project assignment. A best practice is to use IAM Groups to assign such permissions. Each project has a corresponding IAM Group with an IAM Policy that grants permission to assume the IAM Role in that project's accounts. Based on the current assignments, we just need to add each developer to the respective IAM Groups.

As you can see from the example, User 1 is a member of the Project 1 Group and has access to both the Project 1 Dev and Prod accounts, whereas User 2 is a member of both project groups and has access to all accounts.
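The group-per-project approach could look roughly like this in Terraform. The project names, account IDs, `Developer` role name and user names are placeholders that mirror the example above. The IAM users are assumed to already exist in the Login account, which is the default provider here.

```hcl
locals {
  # Hypothetical project -> role ARNs mapping; account IDs are placeholders.
  project_roles = {
    project1 = [
      "arn:aws:iam::111111111111:role/Developer", # Project 1 Dev
      "arn:aws:iam::222222222222:role/Developer", # Project 1 Prod
    ]
    project2 = [
      "arn:aws:iam::333333333333:role/Developer", # Project 2 Dev
      "arn:aws:iam::555555555555:role/Developer", # Project 2 Prod
    ]
  }

  # Current assignments: IAM user name -> projects.
  assignments = {
    user1 = ["project1"]
    user2 = ["project1", "project2"]
  }
}

# One IAM group per project.
resource "aws_iam_group" "project" {
  for_each = local.project_roles
  name     = "${each.key}-developers"
}

# Each project group may assume only that project's roles.
resource "aws_iam_group_policy" "assume_project_roles" {
  for_each = local.project_roles
  name     = "assume-${each.key}-roles"
  group    = aws_iam_group.project[each.key].name

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect   = "Allow"
      Action   = "sts:AssumeRole"
      Resource = each.value
    }]
  })
}

# Group memberships derived from the assignments map.
resource "aws_iam_user_group_membership" "assignment" {
  for_each = local.assignments
  user     = each.key
  groups   = [for p in each.value : aws_iam_group.project[p].name]
}
```

With this layout, moving a developer between projects is a change to the `assignments` map, and it goes through code review like any other change.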

We use this method because it's easy to implement with code, for both ST6-owned and customer-owned AWS accounts.

Using IAM Roles to access customer accounts is also safer and more convenient for our clients, as they just need to create a single role with the necessary permissions and a trust relationship to our Login account.

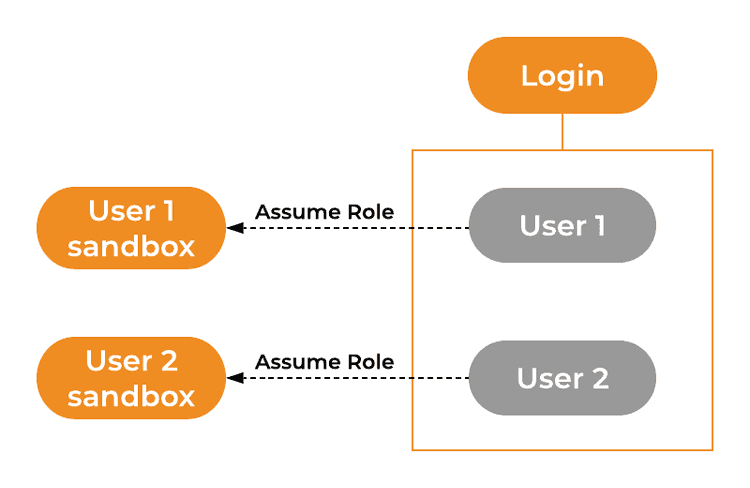

4. Individual sandbox environments

We want to give our developers individual sandbox accounts so that they can test services and continuously improve their skills. Such accounts create a safe environment where people can experiment and level up their cloud knowledge.

Each user has an in-line policy that grants access to the user's own sandbox.

Again Terraform aids us with this. We use code to create a sandbox per developer and grant the appropriate permissions.
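The sandbox accounts and per-user inline policies might look like the sketch below. It assumes the default provider points at the organization's master account and a hypothetical `login` provider alias points at the Login account. The `OrganizationAccountAccessRole` that AWS Organizations creates in new accounts trusts the master account, not the Login account. The policy therefore targets a hypothetical `SandboxAdmin` role, which would have to be created in each sandbox with a trust relationship to the Login account.

```hcl
# Hypothetical mapping: IAM user name -> unique e-mail for the sandbox account.
variable "sandbox_owners" {
  type = map(string)
  default = {
    alice = "aws+sandbox-alice@example.com"
    bob   = "aws+sandbox-bob@example.com"
  }
}

# Default provider: the master account, which creates member accounts.
resource "aws_organizations_account" "sandbox" {
  for_each = var.sandbox_owners
  name     = "sandbox-${each.key}"
  email    = each.value
}

# Hypothetical alias for the Login account, where the IAM users live.
provider "aws" {
  alias  = "login"
  region = "eu-west-1"
  assume_role {
    role_arn = "arn:aws:iam::444444444444:role/TerraformDeploy" # placeholder
  }
}

# Inline policy: each user may assume a role in their own sandbox only.
resource "aws_iam_user_policy" "own_sandbox" {
  provider = aws.login
  for_each = var.sandbox_owners
  name     = "own-sandbox-access"
  user     = each.key

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect   = "Allow"
      Action   = "sts:AssumeRole"
      Resource = "arn:aws:iam::${aws_organizations_account.sandbox[each.key].id}:role/SandboxAdmin"
    }]
  })
}
```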

One thing to point out here is that you should implement some sort of cost monitoring on these accounts. You can use AWS Budgets or billing alarms, which can trigger automation that stops or deletes resources. You might also want to apply a policy to the sandboxes so that only certain regions and services are available.
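For the region restriction, one option is a service control policy on an OU that holds the sandboxes. The sketch below is illustrative. The OU name, the allowed regions and the short `NotAction` list of global services are assumptions. The list is not complete, so check AWS's documentation on region-restricting SCPs before using something like this.

```hcl
# Looks up the organization to find its root (run from the master account).
data "aws_organizations_organization" "current" {}

# Hypothetical OU that holds all sandbox accounts.
resource "aws_organizations_organizational_unit" "sandboxes" {
  name      = "Sandboxes"
  parent_id = data.aws_organizations_organization.current.roots[0].id
}

# Deny actions outside the allowed regions. NotAction exempts a few global
# services; this list is illustrative and NOT exhaustive.
resource "aws_organizations_policy" "sandbox_regions" {
  name = "sandbox-allowed-regions"
  type = "SERVICE_CONTROL_POLICY"

  content = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Sid       = "DenyOutsideAllowedRegions"
      Effect    = "Deny"
      NotAction = ["iam:*", "organizations:*", "sts:*", "budgets:*", "support:*"]
      Resource  = "*"
      Condition = {
        StringNotEquals = {
          "aws:RequestedRegion" = ["eu-west-1", "eu-central-1"]
        }
      }
    }]
  })
}

# Apply the policy to every account in the sandbox OU.
resource "aws_organizations_policy_attachment" "sandbox_regions" {
  policy_id = aws_organizations_policy.sandbox_regions.id
  target_id = aws_organizations_organizational_unit.sandboxes.id
}
```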

At ST6 we trust each other and we prefer not to limit innovation and creativity, so we just monitor the cost and send notifications.
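Cost notifications of this kind can be expressed with AWS Budgets. This is a minimal sketch for one sandbox, applied in the master (payer) account. The account ID, limit, threshold and e-mail address are placeholders. The `cost_filter` block syntax assumes a recent version of the Terraform AWS provider; older versions used a `cost_filters` map.

```hcl
# Monthly cost budget for a single sandbox account (placeholder values).
resource "aws_budgets_budget" "sandbox_alice" {
  name         = "sandbox-alice-monthly"
  budget_type  = "COST"
  limit_amount = "50"
  limit_unit   = "USD"
  time_unit    = "MONTHLY"

  # Only count spend from this linked (member) account.
  cost_filter {
    name   = "LinkedAccount"
    values = ["777777777777"]
  }

  # E-mail the owner when actual spend passes 80% of the limit.
  notification {
    comparison_operator        = "GREATER_THAN"
    threshold                  = 80
    threshold_type             = "PERCENTAGE"
    notification_type          = "ACTUAL"
    subscriber_email_addresses = ["alice@example.com"]
  }
}
```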

5. Cost separation

In my previous blog post, I talked about cost separation. The solution is to create separate accounts for each project, product or environment. We want to be able to see precisely how much our projects and project environments (if such exist) cost.

Our solution is to create an organizational unit (OU) per project, place the project's account (or accounts) in it, and attach the necessary service control policies (SCPs) to it. Then we can easily see the cost of each project and each project environment.
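The following is a minimal sketch of one project OU with Dev and Prod accounts and an example SCP attached. It assumes Terraform runs against the master account. The project name and e-mail addresses are placeholders. The deny-leave-organization SCP only illustrates the kind of policy you might attach.

```hcl
# Look up the organization root.
data "aws_organizations_organization" "current" {}

# One OU per project.
resource "aws_organizations_organizational_unit" "project1" {
  name      = "Project1"
  parent_id = data.aws_organizations_organization.current.roots[0].id
}

# Each environment is its own account inside the OU, so its cost appears
# separately per linked account. E-mails are placeholders.
resource "aws_organizations_account" "project1" {
  for_each  = toset(["dev", "prod"])
  name      = "project1-${each.key}"
  email     = "aws+project1-${each.key}@example.com"
  parent_id = aws_organizations_organizational_unit.project1.id
}

# Example SCP: member accounts may not leave the organization.
resource "aws_organizations_policy" "deny_leave" {
  name = "deny-leave-organization"
  content = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect   = "Deny"
      Action   = "organizations:LeaveOrganization"
      Resource = "*"
    }]
  })
}

# Attach the SCP to the project OU.
resource "aws_organizations_policy_attachment" "deny_leave_project1" {
  policy_id = aws_organizations_policy.deny_leave.id
  target_id = aws_organizations_organizational_unit.project1.id
}
```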

6. Logging archive

It's good practice to export logs containing security and compliance information to a different account. If you store your logs only in the account where they are created, you risk having them deleted by mistake, or intentionally in case of a breach. A central archive guarantees you have a copy in a safe place. Some companies go one step further: they set up this archive in a standalone account outside of the organization and replicate the logs to at least two other regions.

We chose to use an organization trail in AWS CloudTrail, as it's straightforward to implement and covers our immediate needs. Please note that, at the time of writing, an organization trail can be created only in the organization's master account.
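For reference, a minimal organization trail might look like this. It assumes credentials for the master account. The bucket name is a placeholder for a bucket in the log archive account. That bucket's policy, which must allow CloudTrail to write to it, is not shown.

```hcl
# Organization trail; must be created from the master account.
resource "aws_cloudtrail" "organization" {
  name                          = "organization-trail"
  s3_bucket_name                = "example-org-cloudtrail-logs" # placeholder, log archive account
  is_organization_trail         = true # log events from all member accounts
  is_multi_region_trail         = true # capture every region, not just this one
  include_global_service_events = true # include IAM, STS and other global services
  enable_log_file_validation    = true # detect tampering with delivered log files
}
```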

7. Central security and compliance

Security and compliance are very important topics. It is a good practice to have an account dedicated to them. AWS can help you achieve proper governance with services like AWS Config and Amazon GuardDuty, which are good candidates to enable in such an account.

AWS Config can be used to assess your current estate against rules that you define. The findings are stored, so you can check historical data. You can use the integration between AWS Config and AWS Organizations to ease your deployment. Keep in mind that this means the aggregated view will be in the organization's master account. It might be better to set this up in the security account so that you limit the use of the master account.
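One way to put the aggregated view in the security account is to register that account as a delegated administrator for AWS Config and create an organization aggregator there. This sketch rests on several assumptions:

- the account ID is a placeholder;
- `security` is a hypothetical provider alias;
- trusted access for `config.amazonaws.com` is already enabled in the organization;
- the aggregator role, also a placeholder, already exists with the AWS managed `AWSConfigRoleForOrganizations` policy attached.

```hcl
# Run from the master account (default provider): let the security account
# manage AWS Config aggregation for the organization.
resource "aws_organizations_delegated_administrator" "config" {
  account_id        = "666666666666" # placeholder security account ID
  service_principal = "config.amazonaws.com"
}

# Hypothetical alias for the security account.
provider "aws" {
  alias  = "security"
  region = "eu-west-1"
  assume_role {
    role_arn = "arn:aws:iam::666666666666:role/TerraformDeploy" # placeholder
  }
}

# Organization-wide aggregator living in the security account.
resource "aws_config_configuration_aggregator" "organization" {
  provider = aws.security
  name     = "organization"

  organization_aggregation_source {
    all_regions = true
    # Placeholder role with AWSConfigRoleForOrganizations attached.
    role_arn = "arn:aws:iam::666666666666:role/ConfigAggregatorRole"
  }

  depends_on = [aws_organizations_delegated_administrator.config]
}
```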

GuardDuty is a service that uses machine learning to detect threats and anomalies in your accounts. It is a regional service: analyzed data doesn't cross regional boundaries, and you need to enable it in every region you intend to use.
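Because GuardDuty is regional, every region needs its own detector. In Terraform, a common way to express that is one provider configuration per region. The two regions below are examples, and the default credentials are assumed to belong to the security account.

```hcl
# One provider configuration per region; the regions are examples.
provider "aws" {
  alias  = "eu_west_1"
  region = "eu-west-1"
}

provider "aws" {
  alias  = "eu_central_1"
  region = "eu-central-1"
}

# GuardDuty data stays in its region, so each region gets its own detector.
resource "aws_guardduty_detector" "eu_west_1" {
  provider = aws.eu_west_1
  enable   = true
}

resource "aws_guardduty_detector" "eu_central_1" {
  provider = aws.eu_central_1
  enable   = true
}
```

Adding a region means adding another provider alias and detector pair.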

All of this configuration can be done in code. Just make sure that only a small group of security and compliance experts has access to this account.

Our plan is to have a dedicated account for these services, though we haven't implemented it yet.

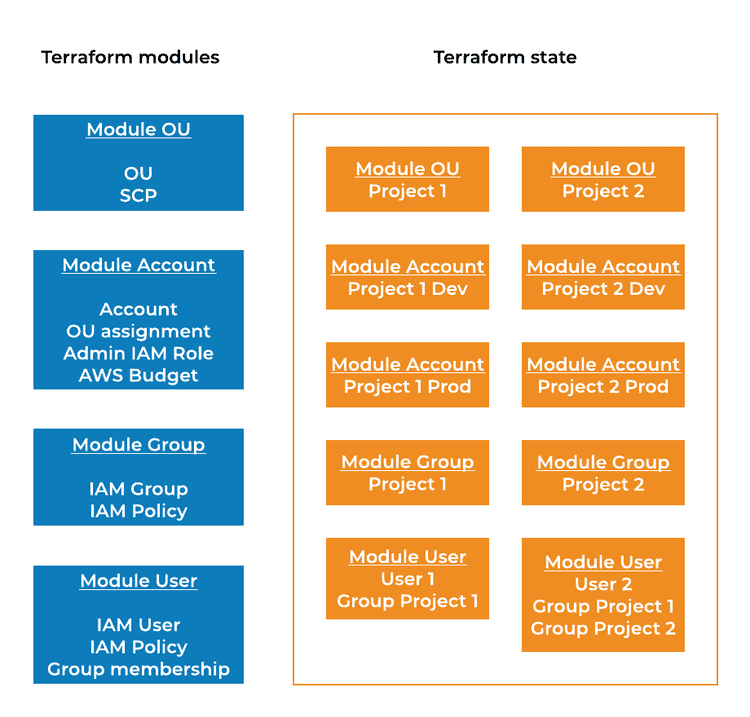

8. Ability to scale

Using code to create and manage an organization is a step in the right direction. A declarative language like HCL is not as flexible as JavaScript or Python, but you can and should leverage modules. Terraform modules are a good way to abstract repeatable configuration, such as the set of resources you create for every new project or team member.

You might also want to split the state, so that the organizational structure is kept in a different state from the IAM users.
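Splitting the state usually means separate root modules, each with its own backend key. The sketch below uses the S3 backend. The bucket, DynamoDB lock table and region are placeholders. Recent Terraform releases can also lock state natively in S3 via `use_lockfile`.

```hcl
# organization/backend.tf: root module for OUs, accounts and SCPs.
terraform {
  backend "s3" {
    bucket         = "example-terraform-state"        # placeholder bucket
    key            = "organization/terraform.tfstate" # this root module's own state
    region         = "eu-west-1"
    dynamodb_table = "example-terraform-locks"        # placeholder lock table
    encrypt        = true
  }
}

# iam/backend.tf lives in a separate directory and uses the same bucket
# with its own key, e.g. key = "iam/terraform.tfstate".
```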

The configuration goes through code review and a deployment pipeline. You should run Terraform only from the pipeline, and only for code that has been approved and merged. You don't want race conditions between branches or simultaneous executions.

At ST6, we use Terraform modules to abstract repeatable configuration and AWS CodePipeline as the single point of execution.
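As an illustration, a per-project module could be instantiated once per entry in a map. The `./modules/project` module and its `name`, `environments` and `members` inputs are hypothetical. `for_each` on modules requires Terraform 0.13 or later.

```hcl
# Hypothetical project definitions; one entry per project.
locals {
  projects = {
    project1 = { environments = ["dev", "prod"], members = ["user1", "user2"] }
    project2 = { environments = ["dev", "prod"], members = ["user2"] }
  }
}

# One instance of the (hypothetical) project module per project, wrapping
# the OU, accounts and IAM group for that project.
module "project" {
  source   = "./modules/project"
  for_each = local.projects

  name         = each.key
  environments = each.value.environments
  members      = each.value.members
}
```

Onboarding a new project then becomes one more entry in the `projects` map.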

Final thoughts

This setup covers our current needs, but as our company evolves our needs will change. We might even have made a wrong decision in the way we addressed something. "The only constant is change" so our solution will change in time as well.

Your main takeaway from this blog post should be to tailor your AWS Organization to your needs and keep it simple. With so much flexibility at your fingertips, it's tempting to overcomplicate things.

In my next blog post, I will cover the automation side of this setup.