GitHub Actions is a powerful tool for automating software development workflows, but it has limitations when it comes to hardware resources. The default GitHub-hosted runners are virtual machines on GitHub's infrastructure with predefined specifications, and those specifications may not suit every use case.

GitHub Actions limitations

- Limited processing power: GitHub-hosted runners have predefined CPU and memory limits. These limits are sufficient for most workflows, but for heavy computation or resource-intensive tasks, such as training machine learning models or running simulations, they can become a bottleneck.

- No GPU support: The standard GitHub-hosted runners do not provide access to GPUs. GPUs are crucial for deep learning, computer vision, and other GPU-accelerated computations; without them, such workflows either run much more slowly on the CPU or cannot run at all.

- Specific software dependencies: GitHub-hosted runners come with a predefined set of software and tools. If your workflow requires specific software versions or custom configurations, you may run into limitations or compatibility issues with the default runners.

Our problem

Our specific challenge is running automation scripts on our custom hardware, which is based on an ARM chipset. These scripts cover tasks such as smoke tests, packaging, and Docker image generation. Running them on the ARM-based hardware itself lets us validate the software's performance, its behavior, and its integration with that specific architecture.

However, the default GitHub-hosted runners cannot run jobs on our custom hardware. Without a runner on that hardware, comprehensive testing and validation are difficult, and the results of our GitHub Actions automation say less about how the software behaves on the target device.

Solution

By setting up a self-hosted runner on NVIDIA's Jetson Nano, you can use the Nano's AI capabilities and low power consumption to run your GitHub Actions workflows. The Jetson Nano is a small single-board computer designed for AI and robotics applications. Its CUDA-enabled GPU can significantly accelerate AI workloads, and because it is an ARM64 (aarch64) board, it also lets workflows run on an ARM architecture, like our custom hardware.

With a self-hosted runner on the Jetson Nano, your workflows can use GPU acceleration, run resource-intensive computations, and exercise the Nano's hardware directly. This works around the limitations of the default GitHub-hosted runners described above.

Prerequisites

- Jetson Nano (this example uses Ubuntu 18.04 with JetPack 4.6.3, L4T 32.7.3)

- Basic knowledge of GitHub Actions and the concepts of self-hosted runners

- Basic knowledge of Docker and containerization
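Before building anything, it can help to confirm which L4T release the host actually runs, since the `l4t-base` tag used in Step 2 is expected to come from the same L4T release line as the host (R32 here). The sketch below reads `/etc/nv_tegra_release`; it assumes the first line of that file looks like `# R32 (release), REVISION: 7.3, ...`, and the `l4t_version` function name is hypothetical.

```shell
# Print the L4T version (e.g. 32.7.3) recorded in nv_tegra_release.
# Assumption: the first line looks like
#   # R32 (release), REVISION: 7.3, GCID: ..., BOARD: ..., EABI: aarch64, DATE: ...
l4t_version() {
  release_file=${1:-/etc/nv_tegra_release}
  # Capture the major release ("32") and the revision ("7.3"), then join them with a dot
  sed -n '1s/^# R\([0-9][0-9]*\) (release), REVISION: \([0-9][0-9.]*\),.*/\1.\2/p' "$release_file"
}
```

On a host set up like the one in this guide, and if the file has the assumed format, `l4t_version` should print `32.7.3`.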

Step 1: Prepare the Jetson host

- Install Docker.

- Install the NVIDIA Container Runtime. NVIDIA Container Runtime for Docker (previously known as "nvidia-docker") enables the use of NVIDIA GPUs within Docker containers. It acts as a bridge between Docker and the NVIDIA GPU drivers, allowing containerized applications to access and use the GPU.

```shell
sudo apt-get install nvidia-container-runtime=3.7.0-1
```

I ran into problems with newer versions on Ubuntu 18.04, so this pins version 3.7.0-1.

Make sure that `/etc/docker/daemon.json` contains the configuration that registers the NVIDIA Container Runtime (alongside any other keys already in the file):

```json
{
  "runtimes": {
    "nvidia": {
      "path": "nvidia-container-runtime",
      "runtimeArgs": []
    }
  }
}
```

Then install the CSV packages:

```shell
sudo apt-get install nvidia-container-csv-cudnn
sudo apt-get install nvidia-container-csv-tensorrt
sudo apt-get install nvidia-container-csv-cuda
```

These packages let a container use the host's CUDA, cuDNN, and TensorRT software stack. Installing them creates CSV files under `/etc/nvidia-container-runtime/host-files-for-container.d`. The CSV files list the paths of the corresponding host libraries, and the NVIDIA Container Runtime mounts those paths into the Docker container, so the container can use the host's CUDA, cuDNN, and TensorRT libraries without bundling its own copies.
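Before restarting Docker, you can sanity-check the two pieces configured above. The sketch below is a rough check rather than a validator: `has_nvidia_runtime` only greps `daemon.json` for the runtime path, and `missing_csv_paths` assumes each CSV line has the form `<type>, <path>` (for example `lib, /usr/lib/...`). Both function names are hypothetical. Separately, if you pinned the runtime version, `sudo apt-mark hold nvidia-container-runtime` keeps a later `apt-get upgrade` from replacing it.

```shell
# Succeeds if the daemon.json file registers nvidia-container-runtime.
# Rough check: a grep for the runtime path, not a JSON parser.
has_nvidia_runtime() {
  grep -q '"nvidia-container-runtime"' "${1:-/etc/docker/daemon.json}"
}

# Prints each path listed in the CSV files that does not exist on this host.
# Assumption: every line has the form "<type>, <path>", e.g. "lib, /usr/lib/...".
missing_csv_paths() {
  csv_dir=${1:-/etc/nvidia-container-runtime/host-files-for-container.d}
  for csv in "$csv_dir"/*.csv; do
    [ -e "$csv" ] || continue                  # the glob matched nothing
    while IFS=, read -r kind path || [ -n "$kind" ]; do
      case $kind in '#'*|'') continue ;; esac  # skip comments and blank lines
      path=$(printf '%s' "$path" | sed 's/^ *//')   # drop the space after the comma
      [ -n "$path" ] || continue
      # Report the path unless it exists (or is a symlink, even a dangling one)
      [ -e "$path" ] || [ -L "$path" ] || printf '%s\n' "$path"
    done < "$csv"
  done
}
```

On a correctly prepared host, `has_nvidia_runtime && echo ok` should print `ok`, and `missing_csv_paths` should print nothing.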

- Restart Docker.

```shell
sudo systemctl restart docker.service
```

Step 2: Create and build a runner image

- Create a Dockerfile.

```dockerfile
FROM nvcr.io/nvidia/l4t-base:r32.7.1

ENV TZ=Europe/Sofia
ENV DEBIAN_FRONTEND=noninteractive
ENV CUDA_HOME="/usr/local/cuda"
ENV PATH="/usr/local/cuda/bin:/home/myuser/.local/bin:${PATH}"
ENV LD_LIBRARY_PATH="/usr/local/cuda/lib64:/usr/local/lib:/usr/lib/aarch64-linux-gnu:${LD_LIBRARY_PATH}"
ENV LLVM_CONFIG="/usr/bin/llvm-config-9"
ENV RUNNER_LABELS="self-hosted-jetson"
ENV REPO_URL='<repo_url>'

# Install dependencies
RUN apt-get update && \
    apt-get install -y \
        curl \
        build-essential \
        sudo \
        # extra dependencies \
    && rm -rf /var/lib/apt/lists/*

# Add a non-root user
RUN useradd -m myuser

# The GPU (for hardware-accelerated access) requires the user to be a member of group "video". This is true for both GUI and CUDA use.
RUN usermod -aG sudo,video myuser

# Set the working directory and give ownership to the new user
WORKDIR /runner
RUN chown -R myuser:myuser /runner

# Set myuser's password (still running as root here, so sudo is not needed).
# A password equal to the user name is only acceptable on an isolated, trusted host.
RUN echo 'myuser:myuser' | chpasswd

# Switch to the new user
USER myuser

# Download the GitHub runner package, verify its checksum, and unpack it.
# Check github.com/actions/runner/releases for the latest version.
RUN curl -o actions-runner-linux-arm64-2.304.0.tar.gz -L https://github.com/actions/runner/releases/download/v2.304.0/actions-runner-linux-arm64-2.304.0.tar.gz \
    && echo "34c49bd0e294abce6e4a073627ed60dc2f31eee970c13d389b704697724b31c6 actions-runner-linux-arm64-2.304.0.tar.gz" | shasum -a 256 -c \
    && tar xzf ./actions-runner-linux-arm64-2.304.0.tar.gz

# At container start: register the runner with the token passed via GITHUB_TOKEN, then run it
CMD ["/bin/bash", "-c", "(./config.sh --token $GITHUB_TOKEN --labels $RUNNER_LABELS --url $REPO_URL --unattended && /runner/bin/runsvc.sh)"]
```

- Build the image. Run this from the directory that contains the Dockerfile:

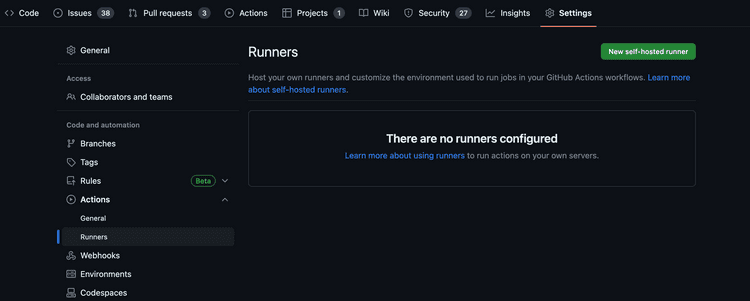

```shell
docker build -t my-nano-runner .
```

Step 3: Create a registration token from the GitHub UI

- Navigate to your repository.

- Go to Settings -> Actions -> Runners.

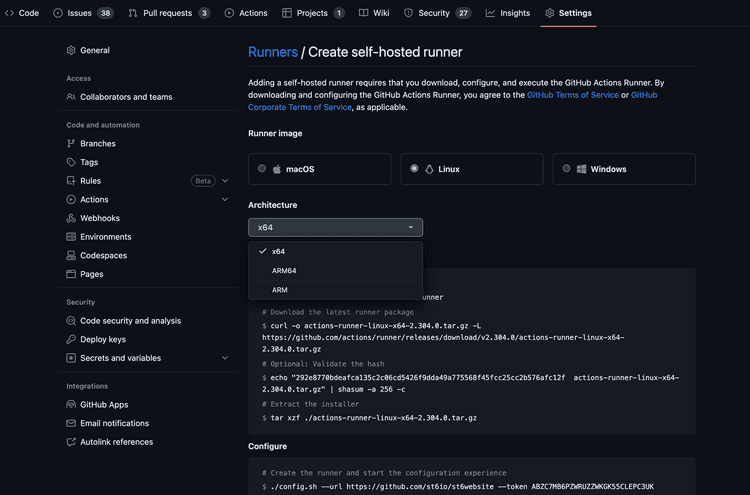

- Click New self-hosted runner in the top right corner.

- Select Linux as the runner image and ARM64 as the architecture.

- Copy the token from the Configure section.

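The Configure section shows a command like the following; the token is the value passed to `--token`:

```shell
./config.sh --url https://github.com/<entity>/<repo> --token <registration token>
```

We will use this token in the next step.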
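Registration tokens expire after one hour, and the image's `CMD` runs `config.sh` every time the container starts, so the token has to be fresh when you start the container. Instead of copying it from the UI each time, you can request one from the GitHub REST API endpoint `POST /repos/{owner}/{repo}/actions/runners/registration-token`. The sketch below assumes `curl` is installed and that a hypothetical `GH_PAT` variable holds a personal access token with admin access to the repository. The helper names are also hypothetical, and the token is extracted with `sed` rather than a real JSON parser.

```shell
# Build the endpoint URL for a repository-level runner registration token.
registration_token_url() {
  printf 'https://api.github.com/repos/%s/%s/actions/runners/registration-token\n' "$1" "$2"
}

# Extract the "token" field from the API's JSON response (sed sketch, not a JSON parser).
parse_token() {
  printf '%s\n' "$1" | sed -n 's/.*"token" *: *"\([^"]*\)".*/\1/p'
}

# POST to the endpoint for repository $1/$2 and print the token.
# Requires curl and the (assumed) GH_PAT personal access token.
fetch_registration_token() {
  if [ -z "${GH_PAT:-}" ]; then
    echo "GH_PAT is not set" >&2
    return 1
  fi
  response=$(curl -fsSL -X POST \
    -H "Accept: application/vnd.github+json" \
    -H "Authorization: Bearer $GH_PAT" \
    "$(registration_token_url "$1" "$2")") || return 1
  parse_token "$response"
}
```

For example, `export GITHUB_TOKEN=$(fetch_registration_token <entity> <repo>)` sets the variable that the next step passes to the container.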

Step 4: Start a runner container

```shell
export GITHUB_TOKEN='<registration token>'   # replace with the token from Step 3
# Optional: export RUNNER_LABELS=... to override the label set in the image
docker run -d \
  --gpus all --runtime nvidia \
  -e "GITHUB_TOKEN=$GITHUB_TOKEN" ${RUNNER_LABELS:+ -e RUNNER_LABELS=$RUNNER_LABELS} \
  my-nano-runner:latest
```

- `--gpus all` flag: Shares all host GPUs with the container, so the container can use the computational power of every GPU available on the host.

- `--runtime nvidia` flag: Selects the NVIDIA Container Runtime for this container, so the container can use the GPU and the host's NVIDIA software, such as CUDA and cuDNN. You can omit this flag if `nvidia` is already set as the `default-runtime` in `/etc/docker/daemon.json`.
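The `${RUNNER_LABELS:+ -e RUNNER_LABELS=$RUNNER_LABELS}` expansion adds the `-e RUNNER_LABELS=...` pair only when `RUNNER_LABELS` is set and non-empty; otherwise it expands to nothing. The hypothetical `start_runner` wrapper below makes that explicit, refuses to start without a token, and accepts an assumed `DOCKER` override so that `DOCKER=echo` performs a dry run. It is a sketch built on the Step 4 command, not part of the original setup.

```shell
# Wrapper around the Step 4 command. DOCKER defaults to "docker";
# set DOCKER=echo to print the arguments instead of starting a container.
start_runner() {
  if [ -z "${GITHUB_TOKEN:-}" ]; then
    echo "GITHUB_TOKEN must hold the registration token from Step 3" >&2
    return 1
  fi
  # The :+ expansion is intentionally unquoted: when RUNNER_LABELS is non-empty
  # it splits into the two arguments -e and RUNNER_LABELS=<value>.
  ${DOCKER:-docker} run -d \
    --gpus all --runtime nvidia \
    -e "GITHUB_TOKEN=$GITHUB_TOKEN" \
    ${RUNNER_LABELS:+-e "RUNNER_LABELS=$RUNNER_LABELS"} \
    my-nano-runner:latest
}
```

`DOCKER=echo start_runner` prints the full argument list. As an alternative design, passing `-e GITHUB_TOKEN` without a value makes Docker take the value from the exported environment variable, which keeps the token itself off the command line.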

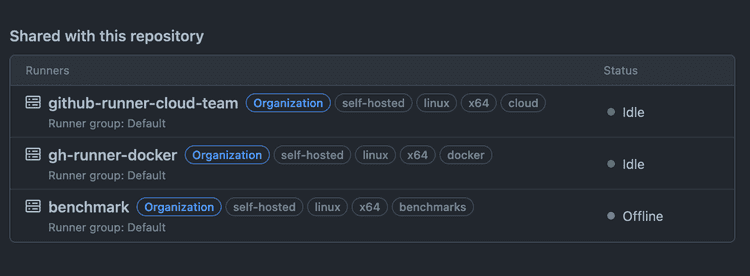

See https://github.com/<entity>/<repo>/settings/actions/runners to check the status of the runner. At this point, the runner should be idle and ready to execute workflows triggered by GitHub Actions.
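Besides the settings page, you can check from the Jetson itself. The sketch below polls `docker logs` until the runner reports that it is waiting for work; it assumes the runner prints a `Listening for Jobs` line once it has connected, which is worth confirming against your runner version. The function names are hypothetical, and because the Step 4 command does not set `--name`, pass the container ID that `docker run -d` prints.

```shell
# Succeeds if the container's log contains the runner's "Listening for Jobs" line.
# DOCKER defaults to "docker" and can be overridden (e.g. with a stub for testing).
runner_is_listening() {
  ${DOCKER:-docker} logs "$1" 2>&1 | grep -q 'Listening for Jobs'
}

# Poll every 2 seconds for up to $2 seconds (default 60), then check once more.
# Returns 0 as soon as the runner is listening, 1 on timeout.
wait_for_runner() {
  container=$1
  timeout=${2:-60}
  waited=0
  while [ "$waited" -lt "$timeout" ]; do
    runner_is_listening "$container" && return 0
    sleep 2
    waited=$((waited + 2))
  done
  runner_is_listening "$container"
}
```

For example, `wait_for_runner <container-id> 120 && echo "runner ready"` waits up to two minutes.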

Step 5: Use our runner in a workflow

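To run a job on the new self-hosted runner, set `runs-on` to the runner label defined in the previous steps:

```yaml
jobs:
  job-name:
    runs-on: self-hosted-jetson
    steps:
      - run: uname -m   # prints the machine architecture (aarch64 on the Jetson Nano)
```

Common problems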

- Updating runners

By default, GitHub runners update themselves, which takes them offline during the update. You can pass the `--disableupdate` flag to `config.sh` to turn automatic updates off, but in that case you must update the runner manually within 30 days of each new runner release.

- Not enough space on the host

If the root storage runs out of space, you may need to expand the Nano's storage with a USB drive and change Docker's default storage directory. This is usually the case with carrier boards that use eMMC storage: the eMMC is 16 GB, and installing NVIDIA JetPack 4.6.3 consumes 11.6 GB of it. You can check out this article for minimizing storage usage on Jetson.

- Pulling the Nano L4T image may require authenticating Docker with your NVIDIA Developer account.

- Different versions of JetPack and Ubuntu have their own quirks. Be prepared to dig through the NVIDIA Developer Forums when the path is not smooth.
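The first two problems can be checked from a script. The sketch below uses hypothetical helper names: `version_lt` compares two runner versions with GNU `sort -V`, `latest_runner_version` reads the `tag_name` of the latest `actions/runner` release from the GitHub REST API (parsed with `sed`, not a JSON parser), and `has_free_kib` checks free space with `df`. If you move Docker's storage to a USB drive, the `data-root` key in `/etc/docker/daemon.json` is the setting that points Docker at the new directory.

```shell
# Succeeds if version $1 is older than version $2 (e.g. 2.304.0 < 2.305.0).
# Relies on GNU coreutils version sort (sort -V).
version_lt() {
  [ "$1" != "$2" ] &&
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# Prints the latest actions/runner release tag without the leading "v".
latest_runner_version() {
  curl -fsSL https://api.github.com/repos/actions/runner/releases/latest |
    sed -n 's/.*"tag_name" *: *"v\{0,1\}\([^"]*\)".*/\1/p'
}

# Prints the free space, in KiB, of the filesystem that holds path $1.
free_kib() {
  df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# Succeeds if the filesystem that holds path $1 has at least $2 KiB free.
has_free_kib() {
  [ "$(free_kib "$1")" -ge "$2" ]
}
```

For example, `version_lt 2.304.0 "$(latest_runner_version)" && echo "update available"` flags that the runner version baked into the image is out of date, and `has_free_kib /var/lib/docker 5242880` succeeds only when at least 5 GiB are free there.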

Conclusion

This guide provided a basic setup for using the Jetson Nano as a self-hosted runner. For more advanced scenarios, you can explore options such as using orchestration tools to provision runner containers on-demand or using Ansible to provision different hosts with specific configurations.

Stay tuned to our blog for follow-up articles on these scenarios, which build on this setup to get more out of the Jetson Nano as a self-hosted runner for your GitHub Actions workflows.