Overview

Ameru is a startup on a mission to accelerate an economically sustainable zero-waste future. The first step in this journey was the creation of an intelligent bin that can automatically sort waste into four main categories - plastic/metal, paper/cardboard, glass, and trash (general waste). The device uses computer vision to identify and sort waste in real time, making waste management more efficient and reducing the amount of recyclable material that ends up in landfills.

Ameru wanted to build an end-to-end ML data engine to support its waste management platform, covering everything from data acquisition and labeling to model training, evaluation, and deployment. The data engine needed to be scalable and flexible to accommodate future improvements and changes to the system. Because Ameru was looking for fast iterations and continuous learning, the team also needed to set up the infrastructure quickly using open-source tools.

High-level architecture

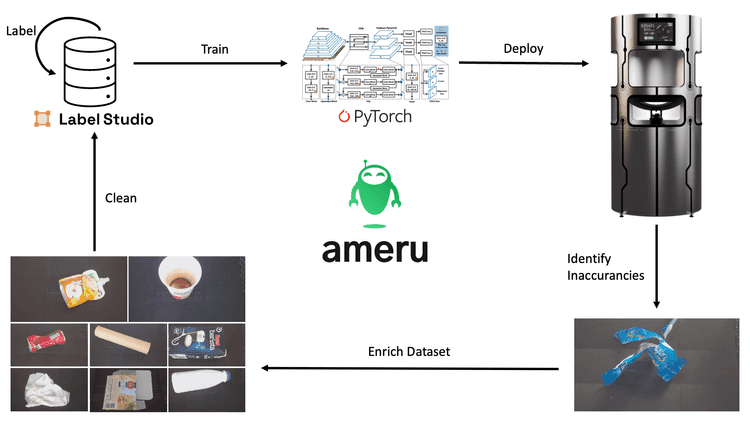

The key to a successful machine learning project is the ability to build a data engine and continuously iterate on it quickly. But what exactly is a data engine in the context of an AI project?

To illustrate what we mean by "data engine," let's take a high-level view of the one we built for Ameru. It consists of five main components:

- Data acquisition: We used Jetson Nano by Nvidia as an AI edge computing device to capture images of waste items as they were thrown into the bin. Images are used for real-time waste detection and classification and stored locally on the device for further processing.

- Data labeling: The captured images needed to be labeled with the correct waste category so the team could use them for training of the machine learning model. We used a combination of manual and ML-assisted labeling with Label Studio, an open-source data labeling tool.

- Model training: The labeled data was used to train a PyTorch machine learning model, which would be used to classify waste items into the correct categories.

- Model deployment: Once the model was trained, it was deployed to the edge device (Jetson Nano) and used to classify waste items in real-time. The model was optimized for the edge using the TensorRT inference engine, which provides the best performance on the limited computing resources of the Jetson Nano.

- Model improvement: As new data is captured continuously, we analyze all new images, and those that are not accurately predicted are added to the training dataset. The process of continuously updating and retraining the machine learning model with new "unseen" data to improve its accuracy and efficiency is known as active learning.

This last step closes the data engine loop. We need to rinse and repeat.

Let's delve into each of those steps and examine them more closely.

Data Acquisition

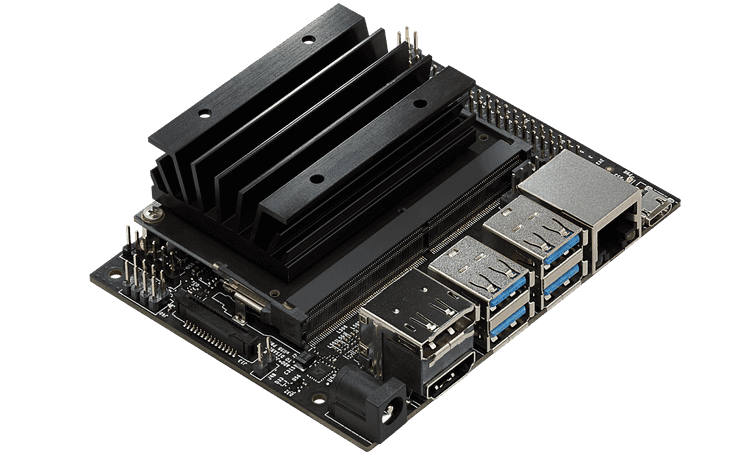

Data acquisition is the first step in the Ameru data engine. The process includes capturing images of waste items as they are thrown into the bin and then utilizing them for real-time identification and categorization of waste. For this purpose, Ameru used Jetson Nano, a small and powerful edge computing device that can perform AI processing at the edge of the network.

A high-resolution camera installed on the Jetson board captures images of the waste items as they enter the bin. The Jetson device is small enough to be mounted on the bin's frame but powerful enough to capture and analyze images in real time and with low latency. Additionally, it consumes minimal power and has a built-in video hardware decoder, which enhances its image-processing capabilities.

Every captured image is first used for real-time waste detection and then stored locally on the device for further processing. Periodically, the locally stored images are securely synced to an S3 bucket in the cloud. This cloud storage is a central location for all waste images and serves as a data source for future model development.
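As a rough illustration of the periodic sync, the sketch below works out which locally stored images still need to be uploaded. The function name `plan_uploads` and the `images/` key prefix are hypothetical, and the actual upload call (for example through an S3 client) is deliberately left out, since the source does not describe how the sync was implemented.

```python
from typing import Iterable, List, Tuple


def plan_uploads(
    local_files: Iterable[str],
    remote_keys: Iterable[str],
    prefix: str = "images/",  # hypothetical key prefix, not from the source
) -> List[Tuple[str, str]]:
    """Return (local_file, s3_key) pairs for images not yet in the bucket."""
    already_uploaded = set(remote_keys)
    plan = []
    for name in sorted(local_files):
        key = prefix + name
        if key not in already_uploaded:
            plan.append((name, key))
    return plan


# Example: one image is already in the bucket, two are still pending.
pending = plan_uploads(
    local_files=["0002.jpg", "0001.jpg", "0003.jpg"],
    remote_keys=["images/0001.jpg"],
)
print(pending)
```

Comparing against the keys already in the bucket keeps the sync idempotent, so an interrupted run can simply be repeated.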

This data acquisition step is critical to the success of the data engine because it ensures that Ameru has a steady stream of data to use for training and improving the machine learning model. It also enables the critical feature of the smart bin - real-time waste detection and classification.

Data Labeling

Data labeling is the process of assigning a label or tag to a piece of data, such as an image, to train a machine learning model to recognize and classify that data. In the case of Ameru's smart bin, data labeling was necessary to accurately train the machine learning model to identify and sort waste items into the appropriate categories.

To accomplish this task, Ameru uses a combination of manual and ML-assisted labeling enabled by Label Studio, an open-source data labeling platform. Manual labeling involves human annotators visually inspecting each image and tagging each waste item with material (glass, paper, bio, etc.), object (cup, bottle, napkin, etc.), and condition (soiled). ML-assisted labeling, on the other hand, uses a pre-trained machine learning model to suggest labels for new images automatically.

The most challenging part of this process was the initial bootstrapping of the ML model because it required a lot of upfront manual annotations. The ST6 team was able to automate this very first training using industry best practices like zero-shot learning via GLIP models. After releasing the initial model, we improved it by gradually incorporating the images on which it performed worst into the training dataset.

Active learning is the process of iteratively improving a model based on new and "unseen" data. Setting up the learning loop was straightforward using the built-in tools in Label Studio. It required connecting the S3 bucket that holds all images to Label Studio as cloud storage. To get the predictions in Label Studio, we deployed our pre-trained model as a Docker image in an AWS Lambda function and connected it to Label Studio as an ML backend.
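To make the ML backend step more concrete, here is a minimal sketch of how model detections could be turned into a Label Studio pre-annotation for bounding boxes. Label Studio expects box coordinates as percentages of the image size. The function name, the input detection format, and the `from_name`/`to_name` values (`label` and `image`) are assumptions; the latter two must match the control names in your own labeling configuration.

```python
from typing import Dict, List


def to_label_studio_prediction(
    detections: List[Dict],
    image_width: int,
    image_height: int,
    model_version: str = "v0",  # hypothetical version tag
) -> Dict:
    """Convert pixel-space detections into a Label Studio prediction dict.

    Each detection is assumed to look like:
    {"box": (x_min, y_min, x_max, y_max), "label": "glass", "score": 0.9}
    """
    results = []
    for det in detections:
        x_min, y_min, x_max, y_max = det["box"]
        results.append({
            "from_name": "label",  # assumed name of the RectangleLabels control
            "to_name": "image",    # assumed name of the Image object
            "type": "rectanglelabels",
            "original_width": image_width,
            "original_height": image_height,
            "value": {
                # Label Studio stores coordinates as percentages (0-100).
                "x": 100.0 * x_min / image_width,
                "y": 100.0 * y_min / image_height,
                "width": 100.0 * (x_max - x_min) / image_width,
                "height": 100.0 * (y_max - y_min) / image_height,
                "rotation": 0,
                "rectanglelabels": [det["label"]],
            },
            "score": det["score"],
        })
    scores = [det["score"] for det in detections]
    overall = sum(scores) / len(scores) if scores else 0.0
    return {"model_version": model_version, "score": overall, "result": results}


prediction = to_label_studio_prediction(
    [{"box": (50, 100, 150, 300), "label": "glass", "score": 0.8}],
    image_width=200,
    image_height=400,
)
print(prediction["result"][0]["value"])
```

Annotators then only need to correct the suggested boxes instead of drawing each one from scratch.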

Using these labeling methods, Ameru could efficiently label its dataset with high accuracy. We used this labeled dataset to train a PyTorch machine learning model, which we then deployed to the edge device (Jetson Nano) for real-time waste detection and classification. As we continuously captured new data, we added incorrectly classified images to the training dataset, which facilitated the continuous improvement of the machine learning model.

Model Training

We implemented the model training process in the data engine system using PyTorch, an open-source machine learning library. PyTorch is popular among researchers and developers for its ease of use and flexibility.

To bootstrap the initial implementation quickly, the ST6 team used popular pre-trained image classification models from the timm repository - specifically, one from the EfficientNet family. Using just image classification allowed the team to iterate quickly on the prototype without the overhead of labeling new images.

As the requirements of the product evolved, the need for an object detection model arose. The ST6 team settled on YOLO (You Only Look Once), a family of object detection models that detect objects in an image or video stream with high accuracy and fast inference speed. These properties make YOLO models well suited to real-time object detection scenarios, such as Ameru's intelligent bin system.

As a general guideline, we recommend choosing a model family that balances speed and accuracy according to the project's specific requirements.

We trained the models in the cloud provided by Lambda Labs, which currently offers one of the best price-to-performance ratios on the market. Furthermore, Lambda Labs provides flexible pricing options that enable users to choose the most cost-effective plan based on their requirements. Access to flexible pricing options can be particularly advantageous for startups like Ameru, which require significant computing resources but have limited budgets.

By using Lambda Labs for model training, the ST6 team was able to take advantage of its high-performance computing infrastructure and cost-effective pricing to train machine learning models more efficiently. Because the team could iterate faster and test more models, we were able to develop a better-performing system.

Model Deployment

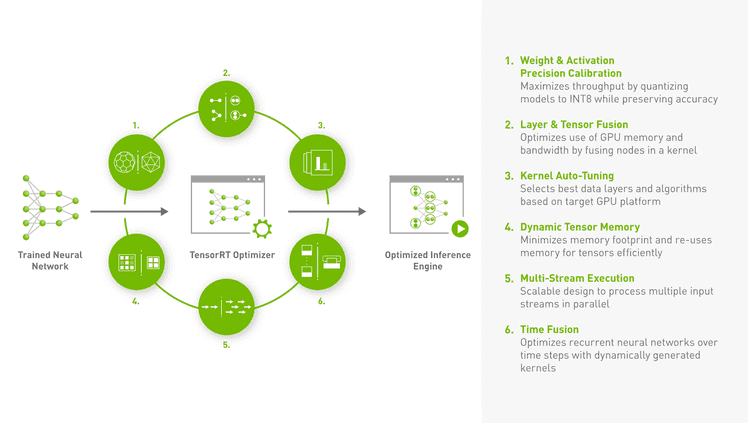

After training the machine learning models using PyTorch, the next step was to deploy them to the Jetson Nano edge devices. We first converted the PyTorch model to the ONNX format, an open standard for representing machine learning models. ONNX provides interoperability between different deep learning frameworks, which makes it easier to deploy models to other platforms.

To optimize the performance of the models on the Nano, we converted the ONNX model into a TensorRT engine. TensorRT is a high-performance deep learning inference library developed by Nvidia. It optimizes the computation graph of the neural network for the specific hardware it will run on, resulting in faster inference times and lower latency. Low latency is critical for the real-time waste detection and classification system, where speed is essential to the product's success.

Using the Jetson Inference library, we integrated the TensorRT model into the entire bin robotics application workflow that detects and dumps waste into the correct bin.

Model Improvement

In the context of Ameru's data engine, model improvement involves the continuous iteration and optimization of the machine learning model through active learning. Active learning is a process of selecting the most informative and uncertain samples from the unlabelled data pool to add to the training dataset to improve the model's accuracy. The new data samples are then labeled by hand, added to the training dataset, and used to retrain the model. By continuously updating and retraining the model with new data, Ameru's data engine can improve the accuracy and efficiency of the waste classification system.
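One simple way to pick "the most informative and uncertain samples" is least-confidence sampling: rank images by how unsure the model is about its top prediction and send the most uncertain ones to annotators. The sketch below assumes per-image class probabilities are available; the function name, the `budget` parameter, and the file names are hypothetical, and the source does not state which selection criterion the team actually used.

```python
from typing import Dict, List


def select_for_labeling(
    predictions: Dict[str, List[float]],
    budget: int,
) -> List[str]:
    """Return up to `budget` image names, most uncertain first.

    Uncertainty is measured as 1 - max(class probabilities), so an image
    whose best guess has low confidence ranks higher.
    """
    def uncertainty(name: str) -> float:
        return 1.0 - max(predictions[name])

    ranked = sorted(predictions, key=uncertainty, reverse=True)
    return ranked[:budget]


# Hypothetical class probabilities for three unlabeled images.
pool = {
    "a.jpg": [0.90, 0.05, 0.03, 0.02],  # confident prediction
    "b.jpg": [0.40, 0.30, 0.20, 0.10],  # very uncertain
    "c.jpg": [0.60, 0.20, 0.10, 0.10],  # somewhat uncertain
}
to_label = select_for_labeling(pool, budget=2)
print(to_label)
```

The budget caps how many images go to human annotators in each iteration, which keeps the labeling effort predictable.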

To ensure the continuous improvement of the model, the ST6 team employed a systematic process to evaluate the model's performance and identify areas for improvement. We monitored the model's accuracy, precision, and recall, which are standard metrics for assessing the performance of computer vision models. The team also performed an error analysis to identify the types of misclassifications and the typical characteristics of the misclassified waste items. Based on the evaluation results, the domain taxonomy was updated numerous times during training to reflect changes in the underlying data structure. For example, the team extracted frequently seen waste items like disposable cups or soiled napkins into separate categories.
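For reference, the metrics mentioned above can be computed per class from paired ground-truth and predicted labels, as in the following sketch. It treats the task as plain classification; for an object detection model, predictions would first need to be matched to ground-truth boxes (for example by IoU), which is not shown here. The function name and the sample labels are illustrative only.

```python
from collections import Counter
from typing import Dict, List, Tuple


def classification_report(
    y_true: List[str],
    y_pred: List[str],
) -> Tuple[float, Dict[str, Dict[str, float]]]:
    """Return overall accuracy and per-class precision and recall."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted this class, but it was wrong
            fn[truth] += 1  # missed an item of the true class

    per_class = {}
    for label in sorted(set(y_true) | set(y_pred)):
        predicted = tp[label] + fp[label]
        actual = tp[label] + fn[label]
        per_class[label] = {
            "precision": tp[label] / predicted if predicted else 0.0,
            "recall": tp[label] / actual if actual else 0.0,
        }

    accuracy = sum(tp.values()) / len(y_true) if y_true else 0.0
    return accuracy, per_class


accuracy, per_class = classification_report(
    y_true=["glass", "glass", "paper", "trash"],
    y_pred=["glass", "paper", "paper", "trash"],
)
print(accuracy, per_class)
```

Looking at precision and recall per class, rather than a single accuracy number, is what makes the error analysis actionable: a class with low recall points to items the model tends to miss.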

In addition to active learning and performance evaluation, ST6 implemented version control and experiment tracking to manage the model improvement process. We used GitHub and Weights & Biases to manage the codebase and track the changes made to the model over time. By implementing these best practices and tools, Ameru's data engine can ensure the continuous improvement and scalability of the waste classification system while minimizing the risk of errors and regressions.

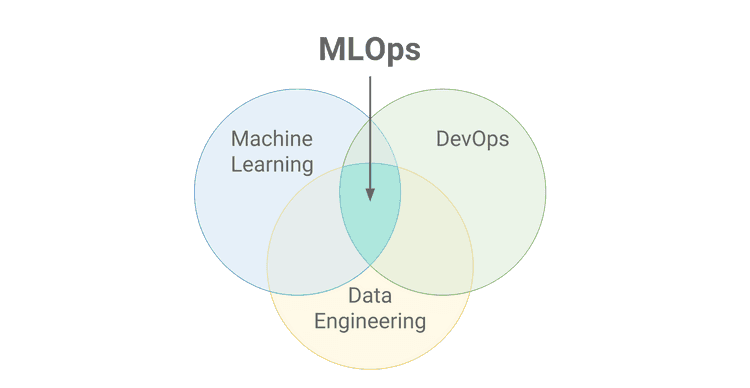

MLOps

A critical part of a successful data engine is automating as much of it as possible. This is where MLOps and DevOps practices can help you integrate machine learning into the entire software development lifecycle. MLOps refers to the process of training, deploying, maintaining, and improving an ML model in a systematic and streamlined manner.

Monitoring model performance, improving and curating the training dataset, and adding unit tests to guard against regressions are some of the essential best practices the ST6 team implemented to speed up the development process and reduce the risk of human error.

To get this right, you must think about the data engine as an ongoing evolutionary process. Don't try to build something perfect from the get-go. Your mantra should be:

Start small, get something working end to end, and then iterate on it.

As the saying goes - “there is only one way to eat an elephant: a bite at a time.”

MLOps is an area where we see a lot of ML projects struggle due to the different mindsets of data scientists, software engineers, and DevOps engineers. You need the skill set of a software craftsman who has a deep understanding of the whole stack and can reason about the system holistically.

Conclusion

ST6's approach of starting small, with a working end-to-end data engine, and then iterating on it exemplifies the mindset of a true software craftsman. This agile technique has enabled Ameru to create a practical and effective solution for waste management without the enormous upfront cost.

In conclusion, Ameru's smart bin is a testament to the positive impact of AI when applied to real-world problems and can inspire other startups and organizations to harness the power of technology for environmental conservation and sustainability.