Overview

Our client offers millions of customers a cloud-based drive for storing documents, images, and videos. The executive team identified a major issue: customers can search their image-based files (such as scanned PDFs, images, and videos) only by file name. With over 20 GB of storage to search through, this limitation frustrates users and presents a strategic challenge as market competition intensifies and seamless tool integration becomes increasingly important. Implementing semantic search within the platform would put our client on par with industry leaders with trillion-dollar valuations.

Solution

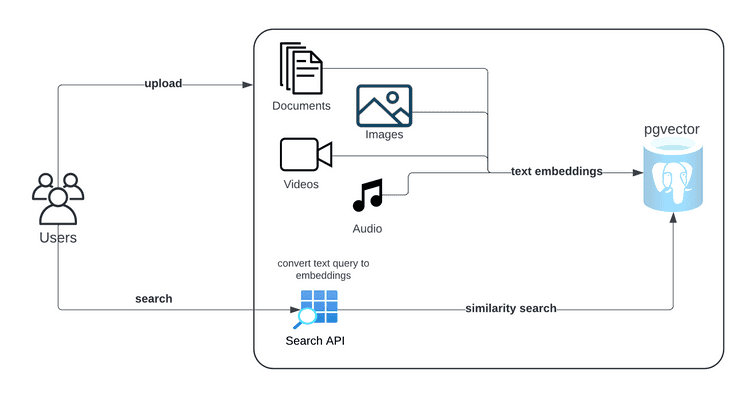

To address the search limitation, our team implemented a solution that enhances the search functionality by leveraging machine learning techniques, specifically text embeddings. The process involves generating text embeddings for each type of document, enabling a semantic understanding of the content rather than relying solely on file names.

Here's a detailed breakdown of the solution:

Text Embeddings for All Document Types

To support all relevant document types, we use a different tactic for each type to produce the text embeddings:

- For text-based documents (such as PDFs, Word files, and Excel files), embeddings are generated directly from the document's content using standard text extraction techniques.

- For image-based files, such as images and scanned PDFs, a multimodal large language model (LLM) is employed. This model interprets the content of the images and generates detailed textual descriptions of what the images depict. The descriptions are then converted into text embeddings, creating a semantic representation of the image content.

- For audio files stored in the cloud drive, we have integrated OpenAI's Whisper model, a state-of-the-art automatic speech recognition (ASR) system. Whisper transcribes the spoken content of audio files into text. These transcriptions are then processed into text embeddings, just like text documents or image descriptions, and stored in the vector database.

- Videos are handled by extracting key frames or scenes, describing them using the multimodal model, and generating embeddings for the key visual segments. Additionally, the audio of the video can be analyzed, providing even more context for the textual representation.
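The routing described in the list above can be sketched as a small dispatch function. This is only an illustration: the extension groups, the `has_text_layer` flag, and the strategy names are hypothetical and do not reflect the actual implementation.

```python
from pathlib import Path

# Hypothetical extension groups; the real system may classify files differently.
TEXT_EXTS = {".txt", ".docx", ".xlsx", ".pdf"}
IMAGE_EXTS = {".png", ".jpg", ".jpeg"}
AUDIO_EXTS = {".mp3", ".wav", ".m4a"}
VIDEO_EXTS = {".mp4", ".mov"}


def choose_pipeline(filename: str, has_text_layer: bool = True) -> str:
    """Return the (hypothetical) name of the text-extraction strategy for a file."""
    ext = Path(filename).suffix.lower()
    # A scanned PDF has no extractable text, so it is treated like an image.
    if ext == ".pdf" and not has_text_layer:
        return "describe_with_multimodal_model"
    if ext in TEXT_EXTS:
        return "extract_text"
    if ext in IMAGE_EXTS:
        return "describe_with_multimodal_model"
    if ext in AUDIO_EXTS:
        return "transcribe_audio"
    if ext in VIDEO_EXTS:
        return "describe_key_frames_and_transcribe_audio"
    return "unsupported"
```

Whatever strategy is chosen, the output is plain text, so a single embedding step can follow every branch.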

Storage in a Vector Database

All generated text embeddings are stored in a vector database. We chose pgvector, an extension for PostgreSQL built for storing and querying large volumes of vector data. Combined with database partitioning, pgvector can store billions of records, which keeps the platform scalable as it grows. A vector database is crucial because it supports efficient similarity search, which a traditional database without vector indexing cannot handle efficiently.
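As a rough sketch of what such a schema could look like, the snippet below builds the SQL statements as Python strings. The table name, column names, embedding dimension, and the choice of hash partitioning by owner are all assumptions made for illustration; the actual schema is not described here.

```python
# Assumption: the dimension depends on the embedding model that is used.
EMBEDDING_DIM = 768

# Hypothetical table layout. The primary key includes owner_id because
# PostgreSQL requires the partition key to be part of the primary key.
SCHEMA_SQL = f"""
CREATE EXTENSION IF NOT EXISTS vector;

CREATE TABLE IF NOT EXISTS file_embeddings (
    file_id   bigint NOT NULL,
    owner_id  bigint NOT NULL,
    embedding vector({EMBEDDING_DIM}) NOT NULL,
    PRIMARY KEY (owner_id, file_id)
) PARTITION BY HASH (owner_id);
"""


def partition_sql(index: int, total: int) -> str:
    """Build the statement that creates one hash partition of the table."""
    return (
        f"CREATE TABLE IF NOT EXISTS file_embeddings_p{index} "
        f"PARTITION OF file_embeddings "
        f"FOR VALUES WITH (MODULUS {total}, REMAINDER {index});"
    )


# Statements for four partitions; a real deployment would pick its own count.
PARTITION_STATEMENTS = [partition_sql(i, 4) for i in range(4)]
```

Partitioning by owner would keep each customer's vectors in one partition, so a search scoped to a single customer touches only a fraction of the data.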

Similarity Search

But how does the similarity search actually work? When a user enters a search query, the system generates a text embedding for the query itself. This embedding is then used to search the vector database using cosine similarity, a mathematical technique that measures the similarity between two vectors based on the angle between them. By calculating the cosine similarity between the query embedding and the document embeddings stored in the database, the system retrieves the most semantically relevant documents, images, and videos—going beyond exact keyword matching.
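To make the ranking step concrete, here is a minimal pure-Python sketch of cosine similarity and a top-k lookup. The two-dimensional vectors and file names are toy values chosen for illustration; real embeddings have hundreds of dimensions, and in production the database performs this ranking.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction; treat it as unrelated
    return dot / (norm_a * norm_b)


def top_k(query, documents, k=3):
    """Return the names of the k documents most similar to the query."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in documents.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]


# Toy embeddings, for illustration only.
documents = {
    "invoice.pdf": [1.0, 0.0],
    "beach.jpg": [0.0, 1.0],
    "receipt.png": [0.9, 0.1],
}
print(top_k([1.0, 0.0], documents, k=2))  # ['invoice.pdf', 'receipt.png']
```

In pgvector the same ranking can be expressed with the `<=>` operator, which returns cosine distance (one minus cosine similarity), so ordering by it in ascending order yields the most similar rows first.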

Cost Evaluation

A key component of our proposal involved a thorough cost evaluation of the entire solution, particularly given the significant computational and storage resources required. Generating and storing text embeddings for hundreds of thousands of files per day is a resource-intensive process, both in terms of computational power and storage capacity. It was essential to carefully plan and assess the financial implications to ensure the solution's sustainability and scalability without incurring excessive costs.

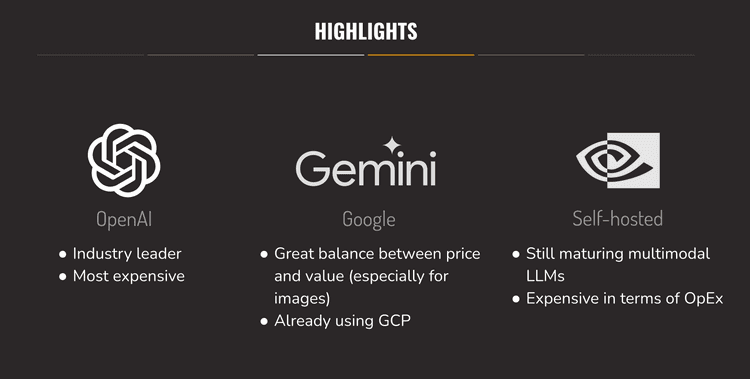

To achieve this, we developed a cost model that compared three potential approaches: using OpenAI's services, using Google Cloud Platform, and self-hosting the solution. Each option was evaluated based on several key criteria, including:

- Token Pricing: The cost per token or API call for generating embeddings or transcribing media files was a critical factor. We examined how each provider charges for the volume of data processed, as well as how these costs scale with increased usage.

- Visual Understanding Cost: This metric looked specifically at the cost of generating detailed descriptions for image-based content, which requires more computationally expensive multimodal models. We analyzed how each provider handled the pricing for image-based processing tasks, including the use of large language models for visual understanding.

- Text Embedding Generation Cost: Since text embeddings are central to our solution, we evaluated the cost of generating these embeddings for both textual and non-textual data, including images, audio, and video content. This comparison helped us determine the cost-effectiveness of each option as the volume of data processed grows.
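A cost model along these lines can be sketched in a few lines of Python. Every name and number below is a placeholder invented for illustration; none of them is a real price from any provider, and the actual model considered more factors.

```python
from dataclasses import dataclass


@dataclass
class ProviderPricing:
    """Hypothetical price sheet for one option (all values are placeholders)."""
    name: str
    usd_per_million_tokens: float            # text generation / transcription
    usd_per_image: float                     # visual understanding
    usd_per_million_embedding_tokens: float  # text embedding generation


def daily_cost(p: ProviderPricing, tokens: int, images: int, embedding_tokens: int) -> float:
    """Estimated daily cost in USD for a given daily workload."""
    return (
        tokens / 1e6 * p.usd_per_million_tokens
        + images * p.usd_per_image
        + embedding_tokens / 1e6 * p.usd_per_million_embedding_tokens
    )


# Placeholder numbers only, not real prices.
options = [
    ProviderPricing("option_a", 1.0, 0.001, 0.1),
    ProviderPricing("option_b", 2.0, 0.002, 0.1),
]
workload = dict(tokens=2_000_000, images=1_000, embedding_tokens=10_000_000)
cheapest = min(options, key=lambda p: daily_cost(p, **workload))
```

Keeping the price sheet separate from the workload makes it cheap to rerun the comparison whenever a provider changes its pricing.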

After careful analysis, Google's Gemini Flash model emerged as the most cost-effective solution for our needs. It offered the best balance between price and performance, combining low cost with accurate embeddings and visual understanding. Google's infrastructure also allowed for high scalability, making it a more sustainable option for our growing data demands. As an added benefit, our client was already using GCP for their cloud needs.

Self-hosting, while providing more control over operations, proved to be significantly more expensive due to the infrastructure and maintenance costs, especially when scaling up to handle large volumes of data. OpenAI offered a competitive solution but was ultimately more costly compared to Google in terms of token and visual processing prices, particularly for large-scale operations.

We reevaluated our cost model several times during the discovery period because every cloud provider cut its prices significantly more than once.

Ease of Integration

One of the key advantages of this solution is the minimal effort required for integration with the existing Cloud Drive infrastructure. The implementation has been designed to seamlessly plug into the current system, allowing the existing team to quickly adopt it without significant changes to the overall architecture.

The integration process primarily involves two straightforward tasks:

- Querying the New API: The Cloud Drive team will only need to update their existing workflows to query the new API for search functionality. This API is built on top of the current Cloud Drive web API, ensuring compatibility and a smooth transition. The team can easily query the API to retrieve semantic search results for documents, images, videos, and audio files, leveraging the new embeddings-based search without having to overhaul their current systems.

- Implementing Webhooks: The second step involves setting up webhooks to notify the system when documents are added, edited, or deleted. This ensures that the text embeddings and vector database stay in sync with any changes in the file storage. By sending events through webhooks, the team can keep the system updated in real-time, triggering the necessary operations to generate or update embeddings without manual intervention.

These two simple integration steps—querying the API and implementing webhooks—allow the product to benefit from the advanced semantic search capabilities with minimal disruption to their existing operations. This approach not only saves time but also reduces the complexity of deployment, making the overall transition smooth and efficient.
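The webhook side can be illustrated with a minimal event handler. The event fields (`type`, `file_id`, `text`), the in-memory `index` standing in for the vector database, and the `embed` callable are all hypothetical; the real payload format is not specified here.

```python
from typing import Callable, Dict, List


def handle_file_event(
    event: dict,
    index: Dict[str, List[float]],
    embed: Callable[[str], List[float]],
) -> None:
    """Keep the embedding index in sync with one file-change event."""
    kind = event["type"]
    file_id = event["file_id"]
    if kind in ("added", "edited"):
        # Re-embedding on edit overwrites the stale vector for this file.
        index[file_id] = embed(event["text"])
    elif kind == "deleted":
        # pop with a default makes repeated delete events harmless.
        index.pop(file_id, None)
    else:
        raise ValueError(f"unknown event type: {kind}")


# Stand-in embedding function, for illustration only.
def fake_embed(text: str) -> List[float]:
    return [float(len(text))]


index: Dict[str, List[float]] = {}
handle_file_event({"type": "added", "file_id": "f1", "text": "hello"}, index, fake_embed)
handle_file_event({"type": "edited", "file_id": "f1", "text": "hello world"}, index, fake_embed)
handle_file_event({"type": "deleted", "file_id": "f1"}, index, fake_embed)
```

Handling added and edited events the same way, and tolerating duplicate deletes, keeps the handler safe if a webhook is delivered more than once.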

Conclusion

In conclusion, the proposed solution significantly enhances search capabilities by enabling efficient, semantic searches across all file types—documents, images, videos, and audio. By leveraging text embeddings, advanced multimodal models, and vector databases like pgvector, this system provides a robust, scalable platform that will improve user experience and keep our client competitive in a rapidly evolving market. The integration process is straightforward, requiring minimal effort from the existing team, which ensures a seamless transition to the new functionality.

Looking ahead, the system offers immense potential for future extensions. With the embeddings already in place, we can easily integrate a document chat feature, allowing users to interact with and ask questions about documents in natural language. This feature would provide instant, context-aware responses based on the document's content, adding significant value to the platform.

Beyond document chat, the system can also be extended to support intelligent document summaries, where users can receive concise summaries of large files or even collections of files. Another possible use case is recommendation systems that suggest relevant documents based on users' past behavior or search queries, further personalizing the experience.

This solution not only addresses the immediate need for enhanced search capabilities but also positions the platform for future growth. By delivering a superior user experience and paving the way for innovations like document chat and intelligent summaries, Cloud Drive will be well-equipped to lead the market, improve customer retention, and drive higher engagement.
